
In recent months, Israeli Facebook users began stumbling across a new group with a familiar name: “Mothers Cook Together.”

With thousands of members and recipes filling its feed, the group looked indistinguishable from the wildly popular Israeli cooking forum of the same name. But this was no kitchen-table community. It was bait.

According to a recent Haaretz investigation, the group was part of a sophisticated foreign influence operation using AI-generated content to infiltrate Israeli social media communities. Pages with titles like “A Blessing a Day” and “Psalms – A Daily Chapter” were designed to draw in conservative and religious Israelis.

Once followers engaged, operators funneled them into WhatsApp groups that harvested personal data and could later pivot into political messaging.

The source of the campaign has yet to be definitively identified, though researchers point to possible involvement by China, Russia, or Iran, based on patterns seen in similar operations elsewhere.

Researchers have compared it to a campaign in Romania last year, in which a nearly identical network began with prayer groups and inspirational content before abruptly transforming into a pro-Russian political campaign on the eve of elections.

An internal investigation into the alleged TikTok campaign led Romania’s Constitutional Court to annul election results that had put a far-right candidate running as an independent in the lead ahead of a runoff.

A child helps his father cast his ballot at a polling station in Bacu village on May 4, 2025, during the first round of the presidential elections in Romania. (Mihai Barbu / AFP)

Analysts fear Israel could be headed down a similar path ahead of its 2026 national elections.

Meta removed the groups shortly after Haaretz published its findings. But the takedowns highlight a recurring problem: by the time campaigns are exposed, much of the damage may already have been done. Thousands of Israelis had already joined or engaged with the pages, their data collected and their feeds influenced.

AI fuels social media manipulation

Launched between April and May, the pages included in the campaign churned out an endless stream of posts tailored to conservative, traditional, and nationalist Israelis — from cliché Shabbat greetings to uncanny AI-generated images of IDF soldiers, Israeli farmers, and supposed war casualties. Some even depicted fake funerals of fallen troops, exploiting the Gaza war to stir emotion and engagement.

Private investigators traced the accounts behind these pages to fake profiles linked to foreign numbers and previous influence operations, including several that appeared to have migrated from campaigns in Eastern Europe.

According to Lt. Col. (res.) David Siman-Tov, deputy head of the Institute for the Research of the Methodology of Intelligence at the Israeli Intelligence Community Commemoration and Heritage Center, foreign influence campaigns designed to mobilize public sentiment are nothing new — but the rise of artificial intelligence has made them far harder to detect.

The OpenAI logo is seen on a cellphone in front of a computer screen displaying the ChatGPT home screen, in Boston, on March 17, 2023. (Michael Dwyer/AP)

“The method of influencing the masses today is through ChatGPT,” Siman-Tov told The Times of Israel, describing AI platforms as “training wheels” for the actors behind such operations.

He explained that AI makes meddling easier on every level — from mass-producing and distributing content to polishing its appearance. Where telltale signs of manipulation once included awkward phrasing or grammatical errors, he said, generative tools now produce text and imagery that appear convincingly authentic to the untrained eye.

Fertile ground for manipulation

According to an analysis published by DataReportal, a free online data reference library, some three-quarters of Israelis had social media accounts as of January 2025. Among adults, usage was nearly universal, it found.

That ubiquity, combined with deep domestic political polarization and international criticism of the war in Gaza, makes Israel a prime target for foreign influence campaigns. And the tools of manipulation are only growing sharper.

Israeli Soldier Surrenders

“Half of Israel is gone, We surrender, Iran please stop this destruction” #TelAviv #IsraeliranWar #IsraelTerroristState pic.twitter.com/X7a1FA2ZhG

— Daddy X (@Daddy_TwiXter) June 16, 2025

A September study by the Israel Internet Association found that one in five war-related disinformation items fact-checked during the Israel-Iran conflict was generated with AI. These included fabricated video clips of surrendering IDF soldiers and fake anti-war protests. The share was even higher for images: 41% of false visuals were AI-generated, “highlighting how synthetic content has quickly become a central weapon in information warfare,” the paper reported.

History repeats itself

Foreign actors have tried to meddle in Israel’s politics before. A joint study by the Institute for National Security Studies and the Institute for the Research of the Methodology of Intelligence revealed that ahead of the 2022 Knesset elections, multiple covert campaigns unfolded on social media.

One sought to fracture the Religious Zionism party, using dozens of fake Twitter accounts posing as Israelis to urge far-right political leader Itamar Ben Gvir to end his Otzma Yehudit party’s joint electoral run with Religious Zionism.

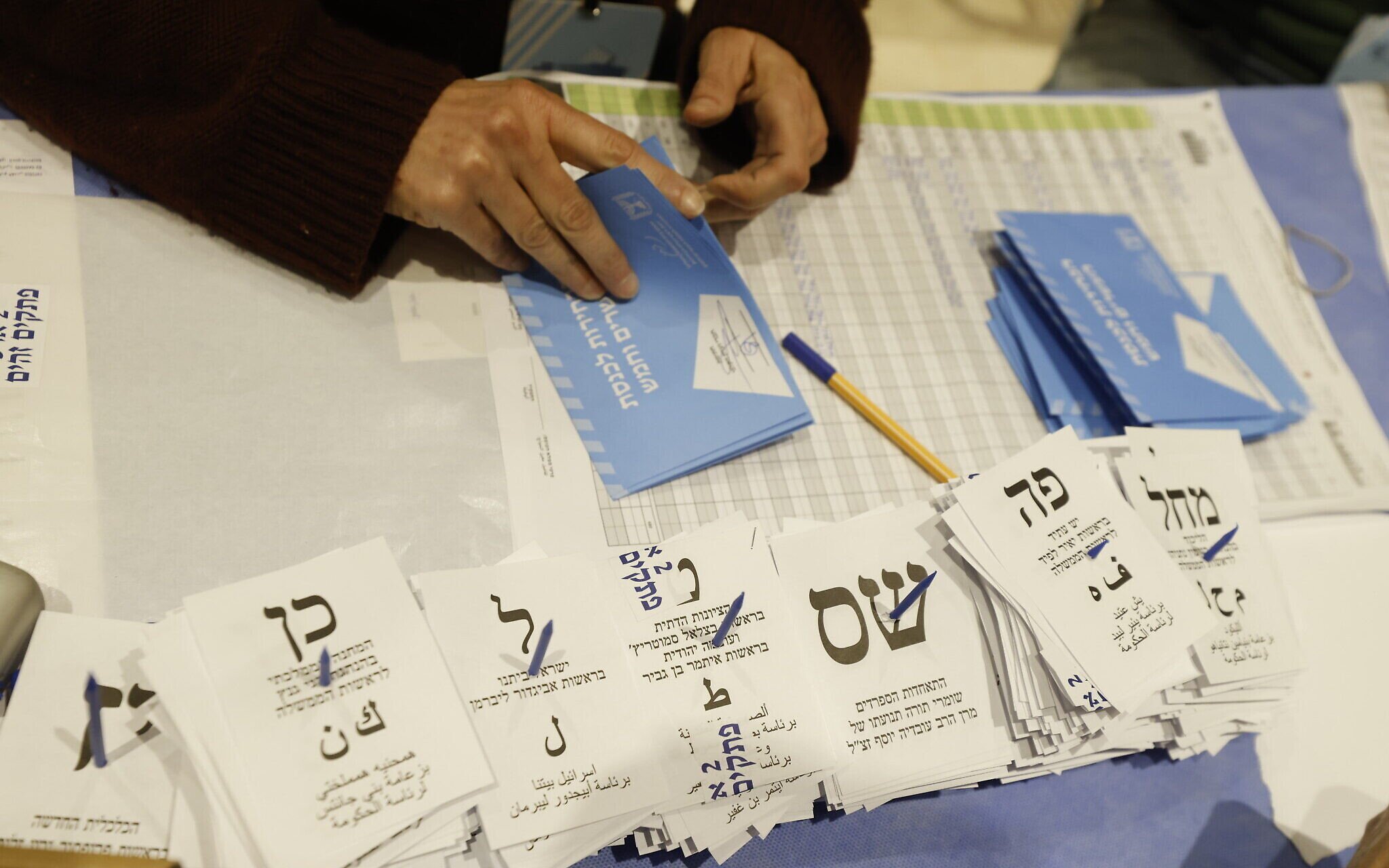

Central Election Committee workers count the final ballots in the Knesset on November 3, 2022 (Olivier Fitoussi/Flash90)

Another campaign aimed to suppress voter turnout, coordinating hundreds of accounts — including some posting in Arabic — days before and on election day.

After the vote, a small network of accounts across Facebook, Twitter, Instagram, and Telegram sought to sow distrust in the results, spreading claims of election fraud and mobilizing protests, with thousands of fake followers inflating the accounts’ apparent credibility.

‘A war on ideas’

Siman-Tov, who is also a senior researcher at INSS, warned that these campaigns are not isolated incidents but part of a wider battlefield.

“These campaigns are part of the war on ideas… At the end of the day, everyone wants to achieve a level of influence in which people will think a certain way,” he explained.

Lt. Col. (res.) David Siman-Tov, a senior researcher at the Institute for National Security Studies (INSS) and deputy head of the Institute for the Research of the Methodology of Intelligence (IRMI) at the Israeli Intelligence Community Commemoration and Heritage Center (Chen Galili/INSS)

Unlike traditional psychological warfare, which can usually be traced back to a known adversary, anonymous campaigns present a more complex challenge. Siman-Tov explained that the key distinction lies in intent: when the source of a campaign is clear, so are its objectives. But when the perpetrator is unknown, such as in the campaign exposed by Haaretz, their goals — whether intimidation, manipulation, or disinformation — remain obscured, making the campaign far harder to counter.

Religious and ideological identities are a recurring target, but Siman-Tov cautioned against assuming vulnerability lies with any single group.

He referenced the ISNAD network, which uses fake Israeli profiles to amplify despair, criticism of the government, and mistrust of state institutions — a strategy it intensified during the Israel-Iran conflict in June to target liberal Israelis.

“They want to increase the opposition against the war, so they single out the left,” he said of the campaign, which the INSS alleges is aligned with Hamas.

Falling through the cracks

Despite repeated campaigns seeking to interfere with Israel’s democratic processes, Siman-Tov argued that the country is lagging behind the West in its response.

“In Israel, there still isn’t a government decision to deal with foreign influence campaigns… There is no single body that is tasked to deal with it. This whole issue sort of falls through the cracks,” he said.

While agencies like the Shin Bet and the National Cyber Directorate respond to specific cases — such as recent reports of Israelis receiving recorded calls in Hebrew attempting to recruit them to spy for Iran — Siman-Tov said these tend to be intimidation campaigns rather than attempts at covert influence. The structural gap lies in addressing more ambiguous, anonymous campaigns.

Illustrative: A woman holds her cell phone with an Israeli flag cover at the Mahane Yehuda Market in Jerusalem, February 23, 2024. (Chaim Goldberg/Flash90)

Social media platforms themselves have taken uneven, and at times contradictory, steps. In January, Meta dismantled its third-party fact-checking program, shifting responsibility for verifying information to user-written “Community Notes,” similar to the system used by X.

Yet just months later, in July, the company reported that it had removed 10 million fake accounts since the start of 2025, underscoring the scale of the very misinformation problem it had scaled back its efforts to address. Meta did not respond to a request for comment on the matter.

Unlike in the European Union, where the Digital Services Act (DSA) and related regulations compel platforms to take responsibility for online content, Israel has no comparable framework. Under the DSA, major social media companies must identify and mitigate systemic risks such as disinformation, allow external audits and researcher access, and can face heavy fines for noncompliance. The EU also enforces transparency rules on political advertising and restricts foreign-funded campaigns near elections — measures designed to protect democratic integrity in the digital space.

European flags in front of the Berlaymont building, headquarters of the European Commission in Brussels (Jorisvo, iStock by Getty Images)

Ultimately, combating influence operations requires both systemic and individual defenses. For governments, Siman-Tov stressed, regulation and coordination are key. For citizens, skepticism must become second nature.

“You need to be suspicious of all the content on your feed. Before even asking if it’s AI-generated, first ask yourself if it makes sense,” he said, recalling how a friend once sent him a doctored image of Syrian President Ahmed Al-Sharaa embracing a woman — a scene he instantly recognized as implausible. “That’s the level of skepticism people need to cultivate.”
